By

14 December, 2025

11 mins

The Energy Crisis of Giant Models: Why the Future of Agentic AI is Small, Specialized, and Sustainable

For the past few years, the AI industry has been locked in an arms race defined by a single metric: parameter count. We’ve been led to believe that "bigger is better"—that the path to Artificial General Intelligence (AGI) is paved with trillion-parameter models consuming small cities' worth of electricity.

But as we move deeper into 2025, that narrative is collapsing under its own weight.

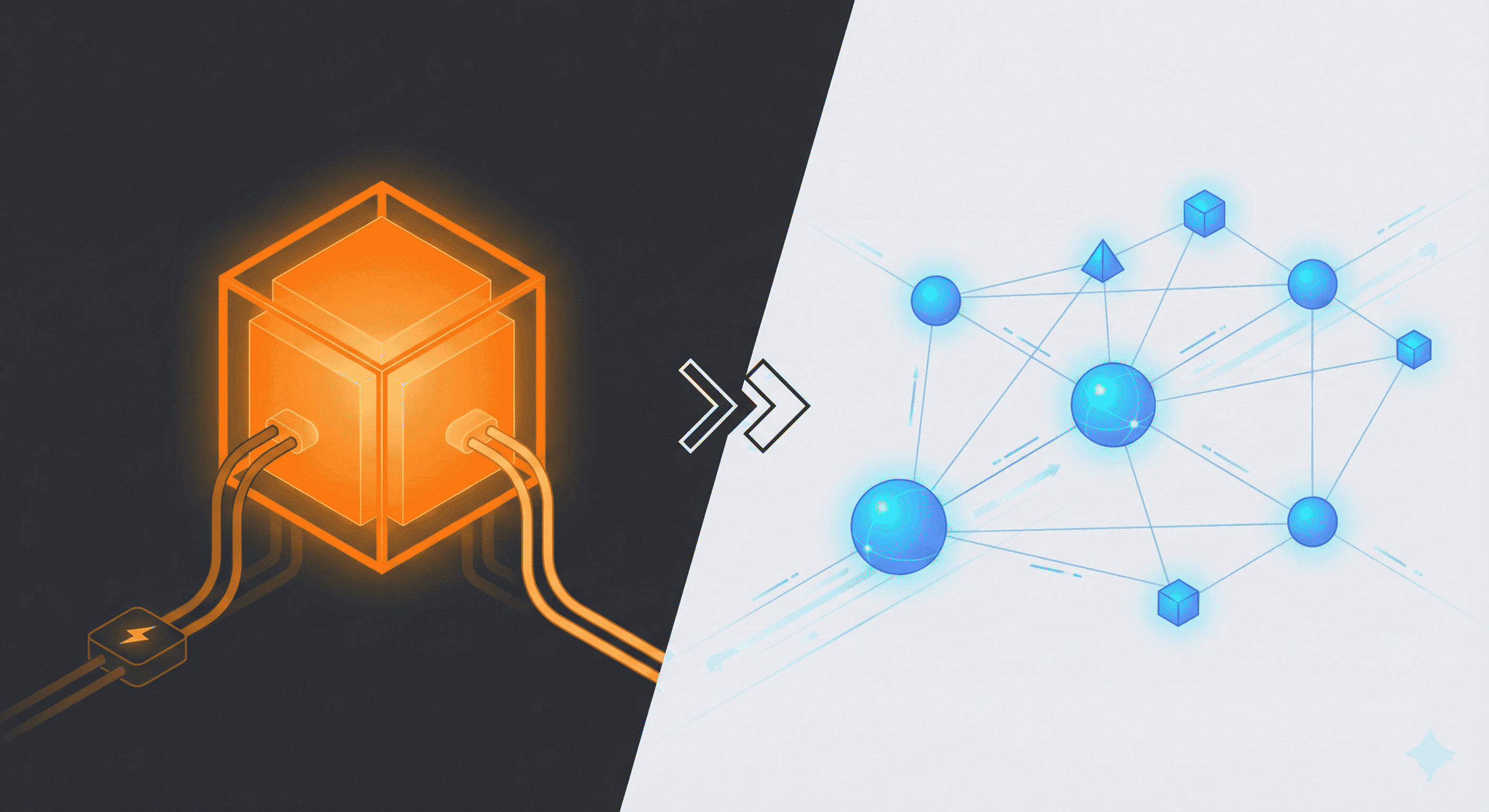

The reality is that the current trajectory of massive Large Language Models (LLMs) is unsustainable—both economically and environmentally. The next breakthrough in AI won’t come from training a larger model; it will come from orchestrating smaller, smarter, and radically more efficient ones.

At Genta, we are seeing a decisive shift away from monolithic "know-it-all" models toward specialized agentic systems. Here is why the future of enterprise AI lies in precision, not just raw scale.

The Hidden Cost of "Bigger is Better"

To understand why the shift is happening, we first have to look at the energy bill.

Training a single large frontier model can consume well over 1,000 megawatt-hours (MWh) of electricity; the widely cited estimate for training GPT-3 alone is about 1,300 MWh, roughly the annual electricity use of 120 average U.S. homes. But training is just the tip of the iceberg. The real energy drain is inference, the cost of running the model every time it is used.
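As a quick sanity check on the household comparison, the sketch below converts a training-energy figure into household-years. It assumes an average U.S. household uses about 10,700 kWh of electricity per year, close to recent U.S. Energy Information Administration averages; that constant is an assumption, not a figure from this article.

```python
# Assumption: average annual electricity use of one U.S. household, in kWh.
# Recent EIA averages fall roughly in the 10,500-10,800 kWh range; adjust as needed.
HOUSEHOLD_KWH_PER_YEAR = 10_700

def household_years(training_mwh: float, household_kwh: float = HOUSEHOLD_KWH_PER_YEAR) -> float:
    """Convert a training-energy figure in MWh into equivalent household-years."""
    training_kwh = training_mwh * 1_000  # 1 MWh = 1,000 kWh
    return training_kwh / household_kwh

for mwh in (1_000, 1_300):
    print(f"{mwh:,} MWh ≈ {household_years(mwh):.0f} U.S. household-years")
```

At 1,000 MWh the result is about 93 household-years; the often-quoted figure of roughly 120 homes corresponds to a training run closer to 1,300 MWh.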

Every time a user prompts a massive generalist model to do a simple task, like summarizing a meeting or extracting an invoice date, it’s like firing up a jet engine to deliver a pizza. Widely cited estimates from the International Energy Agency put a typical ChatGPT request at about 2.9 watt-hours (Wh), close to ten times the roughly 0.3 Wh of a conventional web search.

For enterprises looking to deploy AI agents at scale—running thousands of automated workflows daily—relying on these massive, energy-hungry models is a financial and environmental non-starter.
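To see how per-request energy adds up at enterprise volume, here is a back-of-the-envelope sketch. The per-request figures (2.9 Wh for a large-model chat request, 0.3 Wh for a web search) are the widely cited IEA estimates; the daily workload is a hypothetical placeholder, and real per-call energy varies widely with model size, hardware, and prompt length.

```python
# Widely cited IEA per-request estimates, in watt-hours (Wh).
LARGE_MODEL_WH = 2.9  # one ChatGPT-style request
WEB_SEARCH_WH = 0.3   # one conventional web search

def annual_mwh(requests_per_day: int, wh_per_request: float, days: int = 365) -> float:
    """Annual energy in MWh for a steady daily request volume."""
    return requests_per_day * wh_per_request * days / 1_000_000  # 1 MWh = 1,000,000 Wh

# Hypothetical workload: 1,000 automated workflows per day, each making 20 model calls.
daily_calls = 1_000 * 20
large = annual_mwh(daily_calls, LARGE_MODEL_WH)
search = annual_mwh(daily_calls, WEB_SEARCH_WH)
print(f"Large-model calls: {large:.1f} MWh/year")
print(f"Same volume at web-search cost: {search:.1f} MWh/year")
```

Plugging in a measured per-call figure for a specialized small model shows how much of that gap specialization recovers; the numbers here illustrate scale only.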

The Strategic Pivot: Smaller, Smarter, Faster

Business leaders are realizing that they don’t need an AI that can write Shakespearean sonnets or solve grand philosophical debates. They need an AI that can accurately reconcile a ledger, triage a support ticket, or navigate a complex compliance workflow.

This realization is driving three fundamental shifts in how we build AI architectures.

1. From Generalists to Specialists

This is where Small Language Models (SLMs) and specialized agents shine. Unlike their massive counterparts, SLMs (typically under 10 billion parameters) are designed for efficiency. When fine-tuned on domain-specific data, they can match, and sometimes exceed, much larger general-purpose models on the narrow tasks they were trained for.

They offer distinct advantages for enterprise deployment:

Lower Latency: Fewer parameters mean faster token generation, making real-time agent interactions practical.

Data Privacy: Small enough to run on-premise or within a private cloud, keeping sensitive corporate data secure.

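Drastically Lower Energy Costs: Inference compute per token grows roughly in proportion to parameter count, so a model a fraction of the size can substantially cut the energy use and carbon footprint of each automated task.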
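One common way to put specialists to work is a simple router: routine task types go to a small fine-tuned model, and only unrecognized requests fall back to a large generalist. The sketch below is hypothetical throughout (task names, model names, and parameter counts are illustrative), and it uses the standard rule of thumb that a dense transformer's forward pass costs about 2 FLOPs per parameter per token to compare compute.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ModelSpec:
    name: str              # hypothetical model identifier
    params_billion: float  # parameter count, in billions

    def flops_per_token(self) -> float:
        """Rule of thumb for a dense transformer forward pass: ~2 FLOPs per parameter per token."""
        return 2 * self.params_billion * 1e9

# Hypothetical registry: each routine task maps to a small fine-tuned specialist (< 10B params).
SPECIALISTS = {
    "invoice_extraction": ModelSpec("invoice-slm-3b", 3),
    "ticket_triage": ModelSpec("triage-slm-1b", 1),
    "meeting_summary": ModelSpec("summary-slm-7b", 7),
}
# Hypothetical fallback for open-ended requests that no specialist covers.
GENERALIST = ModelSpec("general-llm-400b", 400)

def route(task_type: str) -> ModelSpec:
    """Send known task types to their specialist; fall back to the generalist otherwise."""
    return SPECIALISTS.get(task_type, GENERALIST)

model = route("ticket_triage")
print(model.name)  # triage-slm-1b
print(GENERALIST.flops_per_token() / model.flops_per_token())  # 400.0
print(route("draft_strategy_memo").name)  # general-llm-400b
```

A lookup table keeps the routing decision cheap and auditable; a production system might instead classify incoming requests with a small model, but the fallback principle is the same.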

2. Context is the New Gold Standard

The flaw of relying on massive models is that they lean on parametric knowledge: facts memorized during training. But in a business setting, memorized facts are often outdated or irrelevant. What matters is context.

The future of agentic AI isn't about a model that knows everything; it’s about a system that can reason about the specific information you give it.

By moving to smaller models, we free up computational resources for longer context windows and Retrieval-Augmented Generation (RAG), which supplies the model with relevant documents at query time. A specialized agent doesn't need to memorize the entire internet; it just needs to work reliably with your company's SOPs, your customer's history, and the specific task at hand.
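The retrieval step can be sketched with the standard library alone. This is a minimal illustration, assuming a bag-of-words cosine similarity as a stand-in for the learned embeddings and vector store a production RAG system would typically use; the SOP snippets and function names are hypothetical, and the resulting prompt would be passed to whatever small model the agent runs.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> Counter:
    """Lowercase word counts; a crude stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, passages: list[str], k: int = 2) -> list[str]:
    """Return the k passages most similar to the query."""
    q = tokenize(query)
    ranked = sorted(passages, key=lambda p: cosine(q, tokenize(p)), reverse=True)
    return ranked[:k]

def build_prompt(query: str, passages: list[str], k: int = 2) -> str:
    """Ground the model in retrieved company context instead of memorized facts."""
    context = "\n".join(f"- {p}" for p in retrieve(query, passages, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

# Hypothetical SOP snippets.
sops = [
    "Refunds over 500 dollars require manager approval.",
    "Invoices are due 30 days after the invoice date.",
    "Support tickets marked urgent must be triaged within one hour.",
]
print(build_prompt("When is an invoice due?", sops, k=1))
```

Here only the invoice passage shares words with the question ("invoice", "due"), so it is the one placed in the prompt; the model never needs to have memorized the policy.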

3. The Rise of Collaborative Agentic Systems

Perhaps the most exciting shift is the move from a single "God-mode" AI to Multi-Agent Systems.

Think of a traditional LLM as a lone genius trying to do every job in a company simultaneously. It’s impressive, but prone to burnout and hallucination. In contrast, a multi-agent architecture acts like a high-performing team. You might have:

A Researcher Agent: Scours documents for specific data points.

A Critic Agent: Reviews the data for accuracy and hallucinations.

A Writer Agent: Formats the final output for the user.

Each agent uses a smaller, optimized model tailored to its specific role. They collaborate, hand off tasks, and cross-check each other. Because each step is narrow and every output is reviewed before it reaches the user, this approach, which mirrors how human teams work, can deliver more consistent and reliable results than a single monolithic model asked to do everything at once.
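The researcher, critic, and writer hand-off can be sketched as a pipeline over shared state. In this minimal sketch each agent is a plain Python stub standing in for a call to its own small model (an assumption; no real model API is used), and the critic's hallucination check is deliberately crude: it keeps only claims that appear verbatim in the source documents.

```python
from dataclasses import dataclass, field

@dataclass
class Draft:
    """Shared state passed from agent to agent."""
    question: str
    claims: list[str] = field(default_factory=list)
    rejected: list[str] = field(default_factory=list)
    answer: str = ""

def researcher(draft: Draft, documents: list[str], keyword: str) -> Draft:
    """Stub for an extraction model: collect sentences that mention the keyword."""
    for doc in documents:
        for sentence in doc.split(". "):
            if keyword.lower() in sentence.lower():
                draft.claims.append(sentence.strip().rstrip("."))
    return draft

def critic(draft: Draft, documents: list[str]) -> Draft:
    """Stub for a verifier model: keep only claims found verbatim in a source document."""
    verified = []
    for claim in draft.claims:
        if any(claim in doc for doc in documents):
            verified.append(claim)
        else:
            draft.rejected.append(claim)
    draft.claims = verified
    return draft

def writer(draft: Draft) -> Draft:
    """Stub for a formatting model: turn verified claims into a short answer."""
    draft.answer = ("; ".join(draft.claims) + ".") if draft.claims else "No verified information found."
    return draft

docs = ["Invoice 1042 was issued on 3 March. Payment terms are 30 days. The invoice total is 1,200 dollars."]
draft = researcher(Draft("What are the details of invoice 1042?"), docs, "invoice")
draft.claims.append("Invoice 1042 was paid in full")  # simulated hallucination from an upstream model
draft = writer(critic(draft, docs))
print(draft.answer)
print("Rejected:", draft.rejected)
```

The critic catches the injected claim because nothing in the source supports it, so the writer only ever formats verified facts. A real verifier would need fuzzier matching than a substring test, but the division of labor is the point.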

Efficiency is the Ultimate Feature

As we integrate AI into critical business workflows, the "wow factor" of 2023 is being replaced by the "ROI factor" of 2025.

The winning organizations won't be the ones with the biggest models. They will be the ones that deploy efficient, sustainable, and reliable agentic workforces. They will prioritize systems that fit seamlessly into existing workflows, consuming a fraction of the energy while delivering superior accuracy through specialization.

The era of raw scale is ending. The era of smart, sustainable integration has begun.

Ready to build efficient, high-ROI AI agents for your business? Contact Genta today to see how specialized automation can transform your operations.

We’re Here to Help

Ready to transform your operations? We're here to help. Contact us today to learn more about our innovative solutions and expert services.
